Generative Models

“I combine magic & science to create illusions”

- Marco Tempest

What are Generative Models?

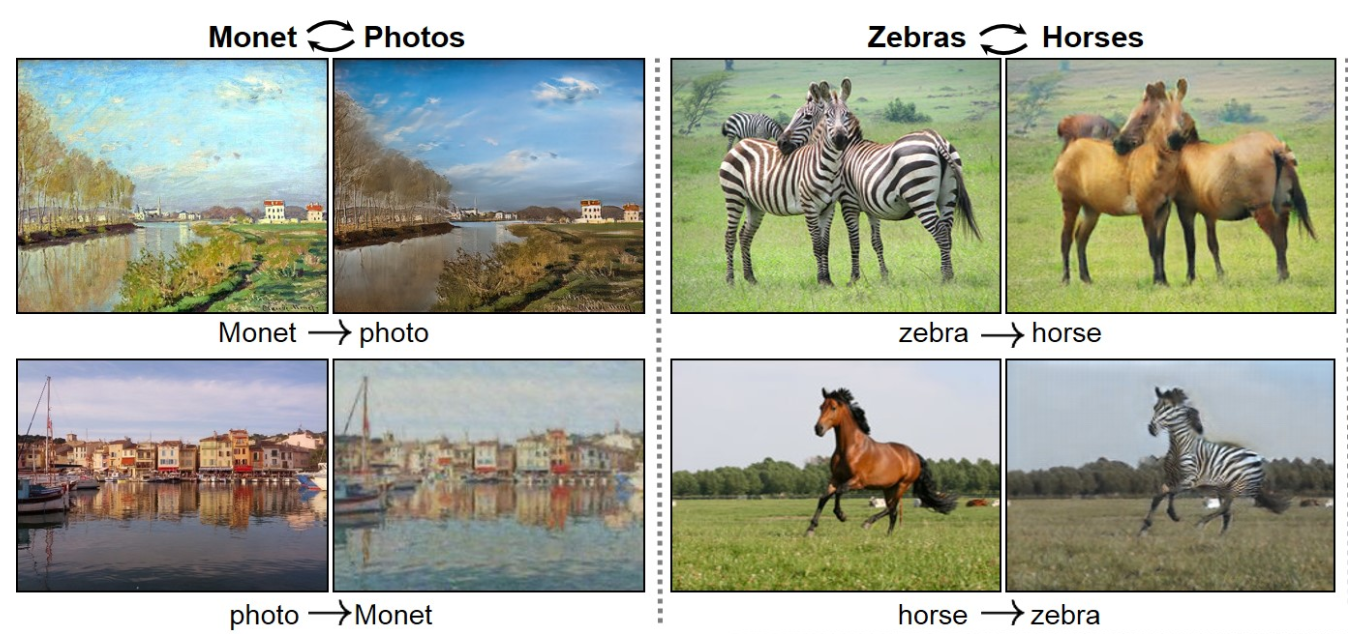

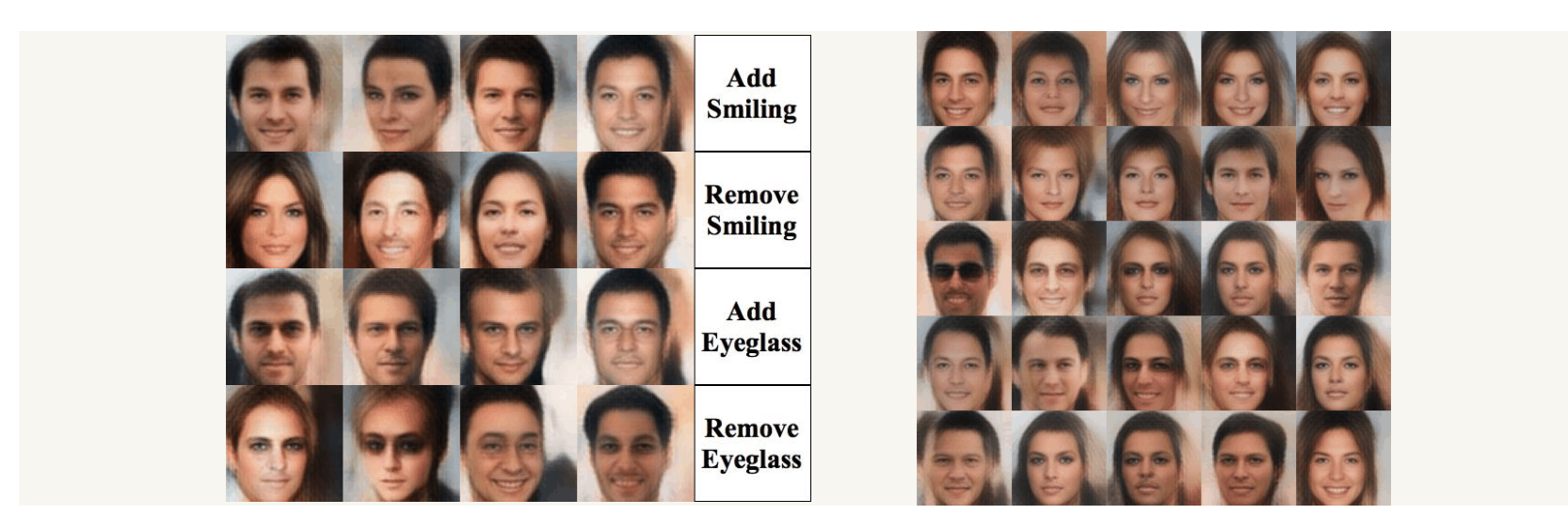

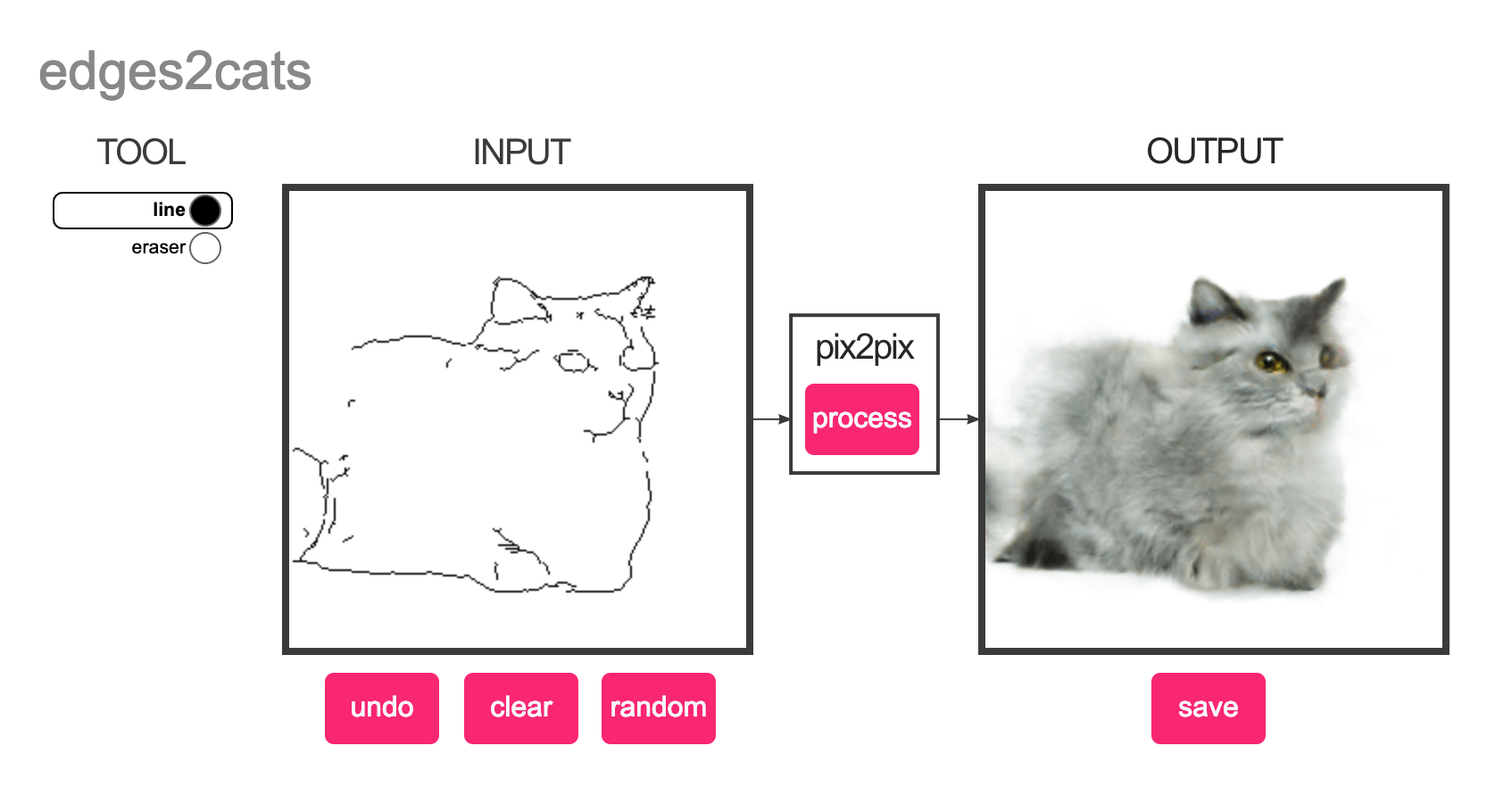

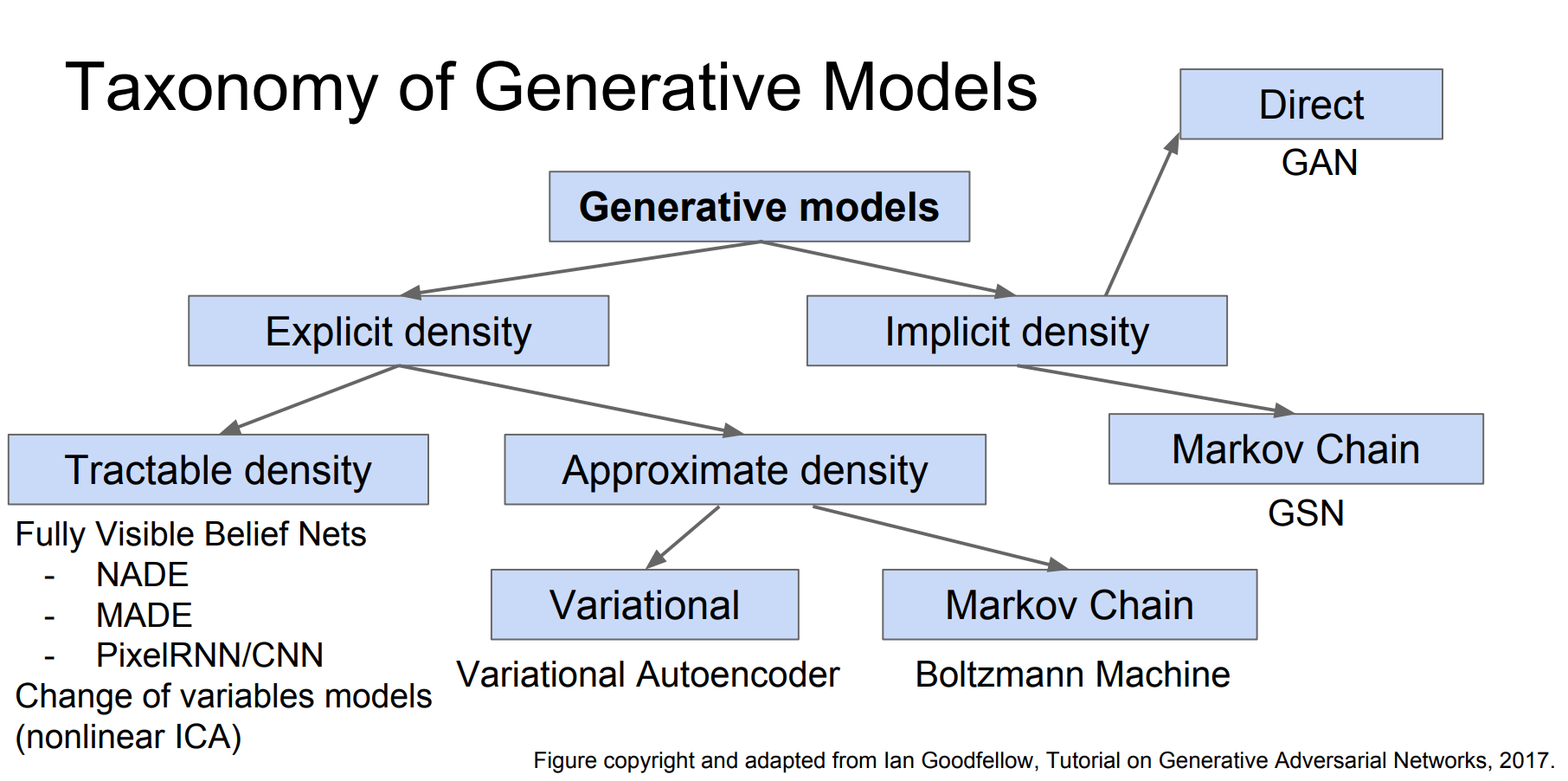

Generative models can be described in ML terms as learning any kind of data distribution using unsupervised learning. Some examples that you might have seen include removing watermarks, transforming zebras into horses (and vice versa), and creating pictures of people who don't exist, among others. When I started diving into this field, the range of methods, as well as what they could do, was confusing to me. After a lot of research, the simple taxonomy developed by Ian Goodfellow remains the most valuable overarching view, and it has helped me structure my understanding of the range of models & techniques. Since this topic is so broad, and I couldn't do it justice with just one blog post, I have decided to embrace it and do it in 5. This is the first.

So, what are Generative Models really then?

Let's look at this question from a number of viewpoints.

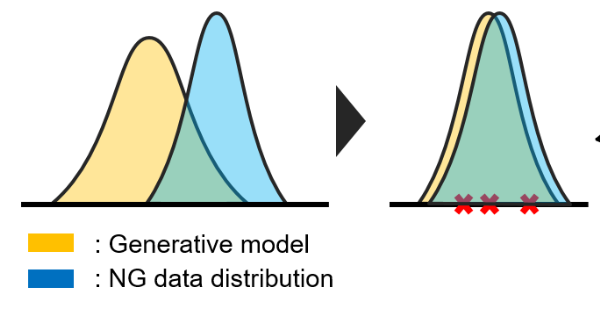

In ML terms, all types of generative models aim at learning the true data distribution of the training set so as to generate new data points with some variations. So from that point of view, once we have figured out this true distribution (or a good approximation of it), we're off to the races and can generate other examples.
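To make the "learn the distribution, then generate" idea concrete, here is a minimal sketch in Python with NumPy. It is a toy under loud assumptions: the "training set" is made-up 1-D data, and the model is a single Gaussian fitted by maximum likelihood, which is far simpler than anything used for images. The names `train`, `mu_hat` and `sigma_hat` are mine, purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "training set": 1-D samples from a distribution
# that we pretend not to know (here secretly N(5, 2^2)).
train = rng.normal(loc=5.0, scale=2.0, size=10_000)

# "Learning" step: the maximum-likelihood estimates for a Gaussian
# are simply the sample mean and the (biased) sample standard deviation.
mu_hat = train.mean()
sigma_hat = train.std()

# "Generation" step: draw brand-new points from the learned distribution.
new_points = rng.normal(loc=mu_hat, scale=sigma_hat, size=5)

print(f"learned mean={mu_hat:.2f}, std={sigma_hat:.2f}")
print("generated:", np.round(new_points, 2))
```

The generated points are not copies of anything in `train`; they are fresh draws that merely look like the training data, which is the "new data points with some variations" part.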

In more math-y terms, we can say the following, taken from Wikipedia:

Given an observable variable X and a target variable Y, a generative model is a statistical model of the joint probability distribution on X × Y, P(X, Y).

In contrast, a discriminative model is a model of the conditional probability of the target Y, given an observation x, symbolically, P(Y|X=x).
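A tiny discrete example may help here. The sketch below assumes a made-up joint table P(X, Y) (the numbers are invented, not from any dataset) and shows two things: with the joint you can sample whole (x, y) pairs, and you can also recover the conditional P(Y|X=x) that a discriminative model would target directly. The helper names `conditional_y_given_x` and `sample_pairs` are hypothetical.

```python
import numpy as np

# Hypothetical joint distribution P(X, Y):
# rows are X in {0, 1, 2}, columns are Y in {0, 1}. Entries sum to 1.
joint = np.array([
    [0.10, 0.20],
    [0.30, 0.10],
    [0.05, 0.25],
])

def conditional_y_given_x(joint, x):
    """P(Y | X=x): take row x of the joint and normalise by P(X=x)."""
    row = joint[x]
    return row / row.sum()

def sample_pairs(joint, n, rng):
    """Generative use of the joint: draw n (x, y) pairs from P(X, Y)."""
    flat = rng.choice(joint.size, size=n, p=joint.ravel())
    xs, ys = np.unravel_index(flat, joint.shape)
    return np.stack([xs, ys], axis=1)

rng = np.random.default_rng(0)
pairs = sample_pairs(joint, 1000, rng)          # new (x, y) data points
p_y_given_x1 = conditional_y_given_x(joint, 1)  # [0.75, 0.25]
print(pairs[:5])
print(p_y_given_x1)
```

Going the other way is not possible: knowing only P(Y|X=x) tells you nothing about how likely each x is, so you cannot generate new observations from it.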

In dictionary terms, a generative model is:

Generative: relating to or capable of production or reproduction.

Model: a simplified description, especially a mathematical one, of a system or process, to assist calculations and predictions.

And in pop culture terms, generative models are the good stuff .....

Taxonomy link

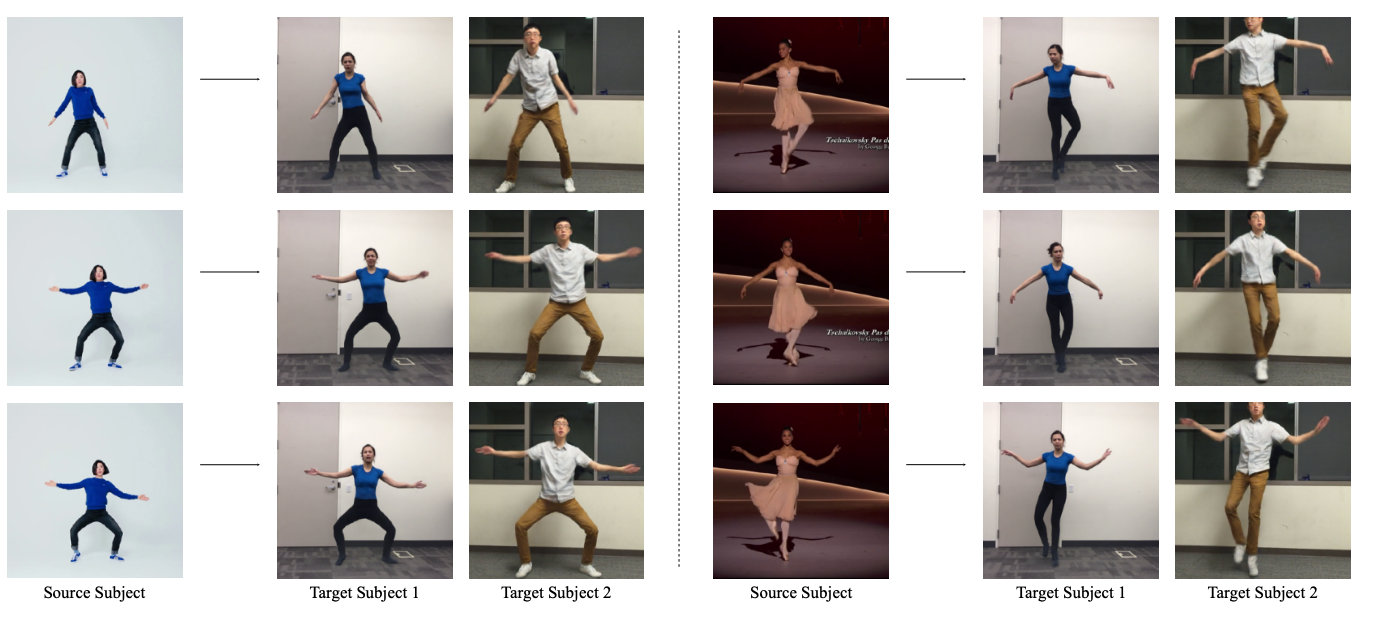

This taxonomy focuses on Generative Models that work via the principle of maximum likelihood. The first split between models is between those that use explicit vs implicit density functions. Next week I will look at Explicit Density Generative Models, in particular my current fave, the VAE (Variational AutoEncoder). Until then, I highly recommend the Everybody Dance Now video that accompanies the paper - here.